The problem is analogous to that of Microsoft's Internet Information Services (IIS). IIS is both a web server and an application server. So, in a comparison chart, it would line up against the Apache and Sun web servers, but it would also line up against Tomcat, WebSphere and WebLogic in the application server category. The same phenomenon occurs often with Identity Management (IdM) products. Some products have a single capability and others have several; some companies offer a single product and others offer many. That makes it very difficult to compare apples to apples.

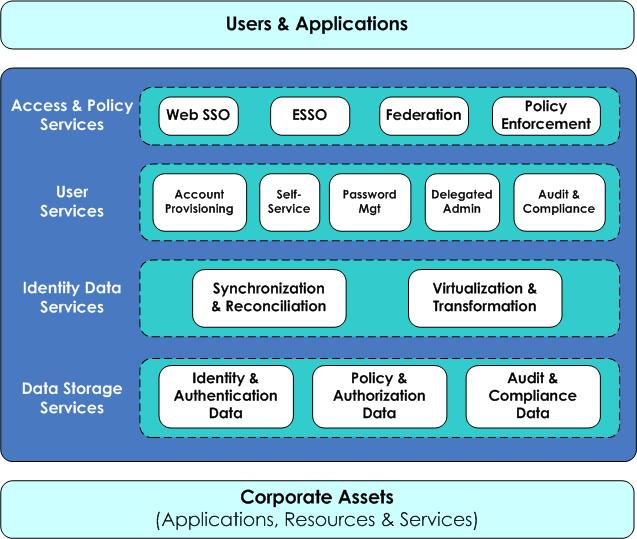

In protest of all this confusion, I keep trying to simplify the IdM landscape. A while ago, I put together an identity services architecture map that could serve as a visual aid when discussing where products fit into an overall IdM architecture. While I'm not 100% satisfied with it, I do think it serves the basic purpose.

Lately, though, I've been rethinking Web SSO and Enterprise SSO (ESSO). I've found that people tend to group them together, but at their core they are two very different technologies.

Web SSO is about securing access. It's generally implemented as a web server filter that intercepts each request and decides whether to grant or deny the requesting party access to the requested resource. Strong Web SSO products typically also include a mechanism for managing web resources and user access permissions.
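To make the filter idea concrete, here is a minimal Python sketch written as WSGI middleware. Everything in it is an assumption for illustration: the `SSO_SESSION` cookie name, the in-memory `SESSIONS` table standing in for whatever session store a Web SSO product populates at login, and the `POLICY` table mapping URL prefixes to allowed groups. It only shows the intercept-then-decide flow; real products add login redirects, signed tokens and centrally administered policy.

```python
from http.cookies import SimpleCookie
from typing import Callable, Dict, Iterable, Optional, Set

# Hypothetical policy store: URL path prefix -> groups allowed to access it.
POLICY: Dict[str, Set[str]] = {
    "/hr": {"hr-staff"},
    "/finance": {"finance", "auditors"},
}

# Hypothetical session store filled in at login: session token -> user's groups.
SESSIONS: Dict[str, Set[str]] = {
    "token-alice": {"hr-staff"},
    "token-bob": {"finance"},
}


class WebSSOFilter:
    """WSGI middleware that intercepts every request and grants or denies access."""

    def __init__(self, app: Callable, cookie_name: str = "SSO_SESSION"):
        self.app = app
        self.cookie_name = cookie_name

    def _groups_for(self, environ) -> Optional[Set[str]]:
        # Look up the caller's groups from the SSO session cookie, if any.
        cookie = SimpleCookie(environ.get("HTTP_COOKIE", ""))
        morsel = cookie.get(self.cookie_name)
        if morsel is None:
            return None
        return SESSIONS.get(morsel.value)

    def __call__(self, environ, start_response) -> Iterable[bytes]:
        path = environ.get("PATH_INFO", "/")
        groups = self._groups_for(environ)
        if groups is None:
            # No valid SSO session (a real product would redirect to a login page).
            start_response("401 Unauthorized", [("Content-Type", "text/plain")])
            return [b"login required"]
        for prefix, allowed in POLICY.items():
            # Deny if the path falls under a protected prefix the user's groups lack.
            if path.startswith(prefix) and not (groups & allowed):
                start_response("403 Forbidden", [("Content-Type", "text/plain")])
                return [b"access denied"]
        # Authenticated and not denied by policy: pass through to the application.
        return self.app(environ, start_response)
```

Protecting an application is then a one-line wrap, `app = WebSSOFilter(app)`, and every request passes through the filter before it reaches the application code.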

ESSO, on the other hand, is about enabling a better end-user experience. It's typically implemented as a desktop client that manages application credentials on behalf of a single user. Some ESSO implementations act as an access proxy somewhere on the network, but that functionality isn't core to ESSO. The main driver for ESSO tends to be end-user experience, not security. In fact, customers often pose the ESSO question to me something like this:

> We want ESSO to make users' lives easier, but we're afraid of the security implications. If a password is compromised, an attacker would now have access to the entire kingdom.

My answer is that the problem isn't with ESSO; it's with the authentication mechanism. With two-factor authentication, a compromised password alone wouldn't let an attacker access anything. And ESSO makes strong password policies practical for applications throughout the enterprise, so passwords are much less likely to be compromised in the first place. If users never need to remember or manually enter their passwords, you could reasonably require 10-character passwords containing upper-case, lower-case, numeric and special characters. You can also force a password change every 30 days, and that change can be transparent to the end user as well. The result is a password that's much harder to guess or brute-force than a typical user's 6- or 8-character alphanumeric password.
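To put rough numbers on the brute-force claim, the Python sketch below compares keyspaces and shows one way an ESSO client might generate a compliant replacement password during a transparent rotation. Assumptions: "special characters" means the 32 ASCII punctuation characters in `string.punctuation`, and `generate_password` is a hypothetical helper, not any ESSO product's API. The figure for the 10-character policy is an upper bound, since it also counts strings that miss a required class.

```python
import math
import secrets
import string

# Assumed character classes for the policy: upper, lower, digits, ASCII punctuation.
CLASSES = [string.ascii_uppercase, string.ascii_lowercase, string.digits, string.punctuation]
ALPHABET = "".join(CLASSES)  # 26 + 26 + 10 + 32 = 94 characters
ALPHANUMERIC = string.ascii_letters + string.digits  # 62 characters


def keyspace(alphabet_size: int, length: int) -> int:
    """Count of all strings of `length` drawn from an alphabet of `alphabet_size`."""
    return alphabet_size ** length


def generate_password(length: int = 10) -> str:
    """Hypothetical ESSO helper: random password with at least one char from every class."""
    if length < len(CLASSES):
        raise ValueError("length must allow one character from every class")
    while True:
        # Draw uniformly from the full alphabet, then reject candidates missing a class.
        candidate = "".join(secrets.choice(ALPHABET) for _ in range(length))
        if all(any(ch in cls for ch in candidate) for cls in CLASSES):
            return candidate


if __name__ == "__main__":
    cases = [
        ("6-char alphanumeric", len(ALPHANUMERIC), 6),
        ("8-char alphanumeric", len(ALPHANUMERIC), 8),
        ("10-char, all four classes (upper bound)", len(ALPHABET), 10),
    ]
    for label, size, length in cases:
        print(f"{label}: about {math.log2(keyspace(size, length)):.1f} bits")
    print("sample rotated password:", generate_password())
```

Under these assumptions, the 10-character policy allows more than 200,000 times as many random passwords as an 8-character alphanumeric one. Rejection sampling keeps the generated password uniform over strings that satisfy every class, instead of forcing one character from each class into fixed positions.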

So I'm thinking I might move ESSO out of the Access Services layer and into the User Services layer. It isn't doing any enforcement, and it isn't managing policies or access rights. It's really only fostering a better user experience in a secure way.

And let's all just start ignoring the term Reduced SSO. It merely acknowledges that not every enterprise system can participate in an SSO implementation. Is that distinction really necessary? We're trying to achieve single sign-on for the participating applications. Let's not confuse customers further with an additional term that only points out what should already be obvious. If we can make all of this simpler, I think we'll see higher adoption (and success) rates and happier customers.